28 November 2025

AI progress and a Safeguarded AI pivot

We sat down with Programme Director David ‘davidad’ Dalrymple to unpack how his programme is pivoting and learn what lies ahead.

While our conviction in the vision for the Safeguarded AI programme remains unchanged, the pace of frontier AI progress has fundamentally altered our path. Rather than investing in specialised AI systems that can use our tools, we believe it will be more catalytic to broaden the scope and power of the TA1 toolkit itself, making it a foundational component for the next generation of AI.

What led to this pivot?

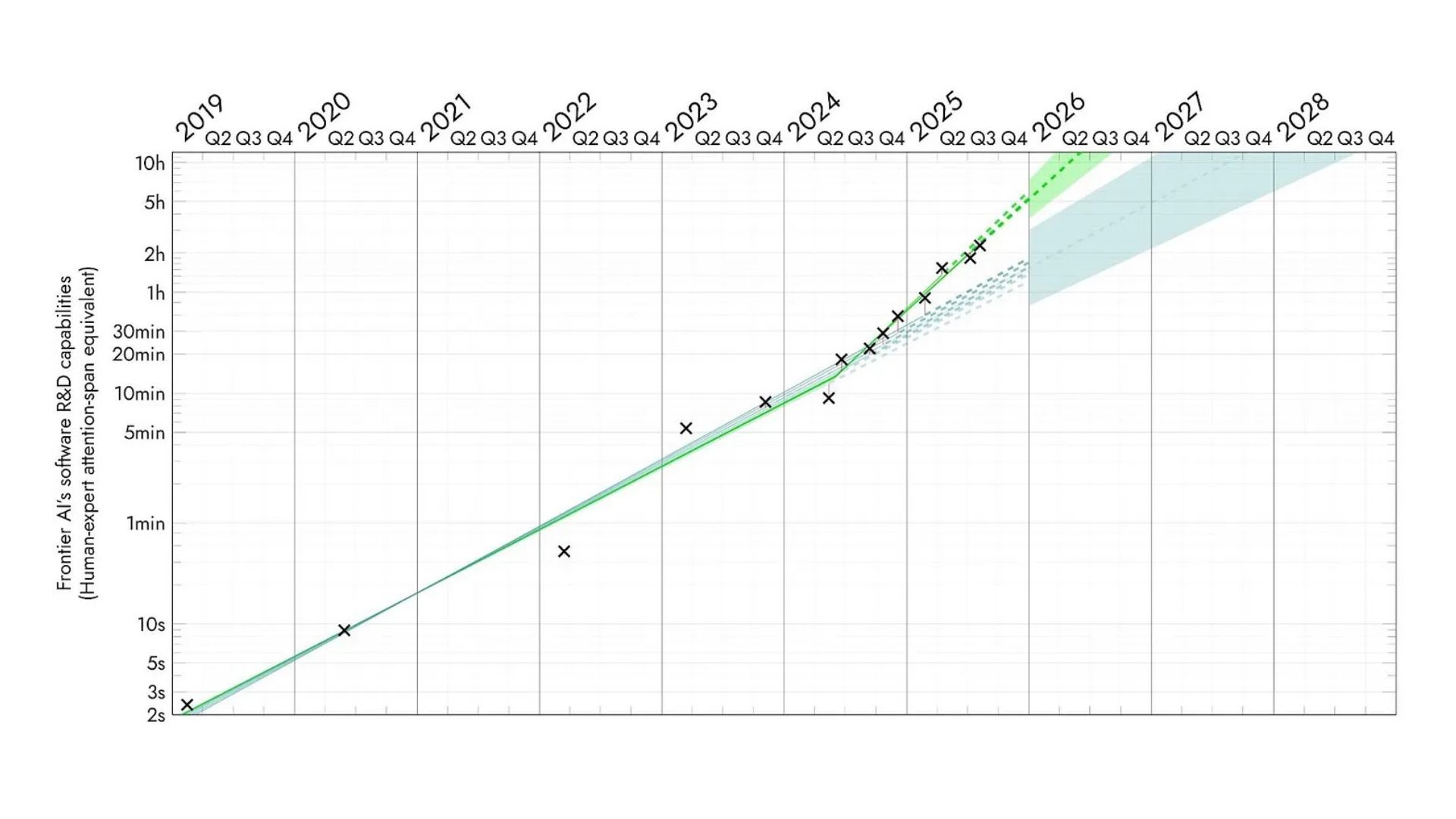

The decision was driven by the speed of progress in frontier AI capabilities, which has outpaced the expectations we held when the programme was designed.

If we look back over the last 12 months, every new frontier model has been more capable than I expected at that point in time. When METR, the nonprofit that measures AI capabilities, evaluated these models, they quantitatively exceeded expectations in the same way. So, we’re not updating based on one model; we’re updating based on a trend across the last four or five frontier models.

Data from ‘Measuring AI Ability to Complete Long Tasks’, METR.

How did you decide that a pivot was needed?

Honestly, it didn’t occur to me that we could depart from the TA2 Phase 2 selection process so close to applications closing. But in a conversation with Nora, the Technical Specialist on the programme, and Ant, ARIA’s CTO, I mentioned that it was more likely than not we’d select zero successful teams for Phase 2. The value of having a dedicated team pursuing ML R&D to develop the capabilities needed for the Safeguarded AI workflow seemed much lower than when we started, because we now expect many of these capabilities to come online soon by default.

Ant said, “What if we just stop it right now?” Once I realised that was a real option, I quickly agreed. We then had a call with Ilan, ARIA’s CEO, and he said, “Great, let’s do it.”

Did this experience of programme management surprise you?

One of the things I appreciate about ARIA is that they back Programme Directors to steer into new directions before the picture is complete. I’m grateful we were able to move so quickly on aborting the TA2 selection, while also having space to develop the new vision thoughtfully.

As part of the pivot, you indicated wanting to expand the scope and ambition of TA1…

Yes. Instead of narrowly targeting assurance for cyber-physical system control, we are thinking about TA1 as a toolkit for mathematical assurance and auditability across a wider range of areas, including software and hardware verification, auditable multi-agent systems, and even less formal kinds of knowledge.

Can you give us an example of one of those challenges?

My current leading hypothesis for a proof point for the platform we’re building is a formally verified firewall: specifically, one that secures point-to-point connections over the open internet.

In critical infrastructure (power grids, water systems, radar and air traffic control), connections that once used dedicated wires now run over the internet, which opens up cyber vulnerabilities. As general-purpose AI becomes more capable, more actors are able to mount sophisticated cyberattacks.

So, part of protecting the public from AI risks is hardening critical cyber systems. DARPA proved a decade ago (through the HACMS programme led by Kathleen Fisher, our incoming CEO!) that formal verification can make software meaningfully secure. With AI, we may be able to scale that process, making it faster and cheaper.

Why do these feel like the most useful application areas for these tools?

There are two reasons. First, with shorter timelines to societal change from AI, we need to make an impact sooner, and feedback loops in information technology are much faster. Second, as AI systems become capable of autonomously executing increasingly complex and lengthy R&D tasks, we want to build a toolkit that enables high-quality human oversight of those processes, while also being extensible by those AI systems themselves.

How do you see the toolkit and frontier AI development interacting?

Frontier AI companies are eager for new, challenging tasks to train the next generation of models. A suite of tools and examples, such as verifying a firewall or a cryptographic protocol, could be invaluable for them. We’ll release the toolkit as open source, so frontier AI labs can use it to advance formal reasoning capabilities.

This isn’t just ‘can you write Python code that passes tests?’, but ‘can you write code that carries a proof verified by a trusted kernel?’ That trusted kernel also gives feedback that’s much harder for an AI system to ‘reward hack’, since the system must prove correctness for all cases, not just the test cases.
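To make the contrast between tests and proofs concrete, here is a toy sketch in Lean 4. Lean is used only because it is a widely available proof assistant with a small trusted kernel; this is not the TA1 toolkit, and the `allowed` rule and `allowed_sound` theorem are hypothetical illustrations loosely inspired by the firewall example. The `#eval` line checks a single input, much like a unit test, while the theorem is checked by the kernel for every possible allow-list and port.

```lean
-- Hypothetical toy firewall rule (illustration only, not ARIA's toolkit):
-- accept traffic on a port only if that port appears in the allow-list.
def allowed (allowList : List Nat) (port : Nat) : Bool :=
  decide (port ∈ allowList)

-- A test checks one concrete input, like a unit test.
#eval allowed [22, 443] 443  -- true

-- A proof covers every allow-list and every port:
-- whenever `allowed` returns true, the port really is in the allow-list.
theorem allowed_sound (allowList : List Nat) (port : Nat)
    (h : allowed allowList port = true) : port ∈ allowList := by
  unfold allowed at h
  exact of_decide_eq_true h
```

A "reward-hacked" implementation that, say, always accepted port 443 would still pass the `#eval` check above, but it could not be proven to satisfy `allowed_sound`, because the statement would be false for any allow-list that omits 443.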

It’s a win-win: if the next generation of AI models becomes more skilled at proving formal properties using our tools, those same tools will be even more valuable when those models are released.